Introduction

CAP stands for Consistency, Availability and Partition tolerance. The CAP theorem, also called Brewer’s theorem after computer scientist Eric Brewer, first appeared in autumn 1998 and was published as the CAP principle in 1999. It states that a distributed data store cannot simultaneously provide more than two of the following three guarantees:

- Consistency: Every read receives the most recent write or an error

- Availability: Every request receives a (non-error) response, without the guarantee that it contains the most recent write

- Partition tolerance: The system continues to operate despite an arbitrary number of messages being dropped (or delayed) by the network between nodes

Fallacies of Distributed Systems

Distributed systems depend on many moving parts connected over a network, and they rely heavily on partitioning to achieve scalability. Keep in mind that network failure is inevitable; see http://www.mypassionfor.net/2020/03/11/8-fallacies-of-distributed-computing/

Can a system have all CAP?

The only system that can practically provide all three CAP guarantees is a single-node system, which can’t be regarded as a distributed system. Moreover, it can’t scale to the level that a distributed system is designed for.

Note: A single node can use only a Scale-Up (Vertical-Scaling) strategy. Any system that uses Scale-Out (Horizontal-Scaling) has to abide by the CAP theorem.

Explanation

No distributed system is safe from network failures, thus network partitioning has to be tolerated. In the presence of a partition, one is then left with two options: consistency or availability. When choosing consistency over availability, the system will return an error or a time out if particular information cannot be guaranteed to be up to date due to network partitioning.

When choosing availability over consistency, the system will always process the query and try to return the most recent available version of the information, even if it cannot guarantee that it is up to date due to network partitioning (which is better for user experience in many cases). When the network connection is re-established, the nodes/partitions converge on the same data again, i.e. the system is eventually consistent.
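The convergence step can be illustrated with a minimal sketch. It assumes a last-write-wins rule keyed on a per-item version counter; the `Versioned` type, the `reconcile` function, and the sample data are hypothetical names chosen for illustration, and real databases use more elaborate schemes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Versioned:
    value: str
    version: int  # hypothetical write counter; higher means newer


def reconcile(a: dict, b: dict) -> dict:
    """Merge the state of two replicas, keeping the higher version of each key."""
    merged = dict(a)
    for key, item in b.items():
        if key not in merged or item.version > merged[key].version:
            merged[key] = item
    return merged


# During the partition, each side accepted writes independently.
replica_1 = {"cart": Versioned("book", 1), "name": Versioned("Ann", 3)}
replica_2 = {"cart": Versioned("book, pen", 2), "name": Versioned("Ann", 3)}

# Once the network heals, both replicas adopt the merged state.
healed = reconcile(replica_1, replica_2)
replica_1 = replica_2 = healed
print(healed["cart"].value)
```

This sketch does not resolve the case where two different values carry the same version; it simply keeps the first one it saw.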

Database systems designed with traditional ACID guarantees in mind, such as RDBMSs, choose consistency over availability, whereas systems designed around the BASE philosophy, which is common in NoSQL databases, choose availability over consistency.

CAP in Practice

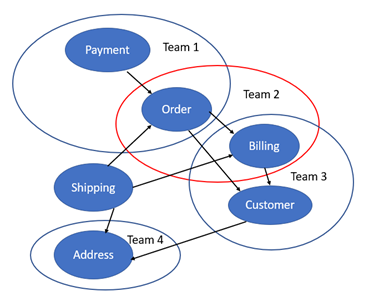

Let’s say you are designing a distributed system with a cluster of 4 data nodes and a replication factor of 2, i.e. any data written to the cluster must be written to 2 nodes, so that when one goes down the second can still serve the data.
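As a rough sketch of this setup, the snippet below places each key on 2 of the 4 nodes. The node names and the hash-based placement rule are assumptions made for illustration; the article does not prescribe how replicas are chosen.

```python
import hashlib

NODES = ["node-1", "node-2", "node-3", "node-4"]  # hypothetical node names
REPLICATION_FACTOR = 2


def replicas_for(key: str) -> list:
    """Pick REPLICATION_FACTOR consecutive nodes, starting at a hash-derived index."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    start = int.from_bytes(digest[:8], "big") % len(NODES)
    return [NODES[(start + i) % len(NODES)] for i in range(REPLICATION_FACTOR)]


def readable_from(key: str, down: set) -> list:
    """Return the replicas of `key` that are still up."""
    return [node for node in replicas_for(key) if node not in down]


owners = replicas_for("user:42")
# With one owner down, the other can still serve the key.
survivors = readable_from("user:42", down={owners[0]})
print(owners, survivors)
```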

Now try to apply the CAP theorem to this requirement. Remember that in a distributed system two things may happen at any time: node failure (hard disk crash, EC2/VM failure) or network failure (the connection between two nodes goes down) [Fallacy of distributed computing].

CP [Consistency/Partition Tolerance] Systems

A distributed system can ensure data consistency at read time through a voting mechanism, in which all nodes that hold a copy of the data agree that they have the “same copy” of the requested data. Now assume that the requested data is present on two nodes, A-1 and A-2. The client tries to read the data, and because our CP system must also be partition tolerant, suppose the expected network failure occurs and A-1 is detected as down. The system now cannot determine whether A-2’s copy is the latest; it may be stale. So the system decides to send an error to the client.

Here the system chose data consistency over data availability. Similarly, at write time, if the replication factor is 2, the system may reject write requests until it finds two healthy nodes on which to write the data consistently. Hence the system is now considered not “Available”, i.e. CAP’s “A” is sacrificed.
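The CP behaviour described in this section might look roughly like the sketch below. The `Replica` class, the `Unavailable` exception, and the rule "every replica must be reachable and agree" are simplifying assumptions for a replication factor of 2, not a description of any particular database.

```python
class Unavailable(Exception):
    """Raised when the system refuses to answer rather than risk inconsistency."""


class Replica:
    def __init__(self, name):
        self.name = name
        self.up = True
        self.data = {}


def cp_write(replicas, key, value):
    """Write only if every replica is reachable; otherwise reject the request."""
    if not all(r.up for r in replicas):
        raise Unavailable(f"cannot replicate {key!r} to all {len(replicas)} replicas")
    for r in replicas:
        r.data[key] = value


def cp_read(replicas, key):
    """Read only if every replica is reachable and they all hold the same value."""
    if not all(r.up for r in replicas):
        raise Unavailable(f"cannot confirm the latest value of {key!r}")
    values = {r.data.get(key) for r in replicas}
    if len(values) != 1:
        raise Unavailable(f"replicas disagree on {key!r}")
    return values.pop()


a1, a2 = Replica("A-1"), Replica("A-2")
cp_write([a1, a2], "balance", 100)

a1.up = False  # A-1 is detected as down
try:
    cp_read([a1, a2], "balance")
except Unavailable as exc:
    print("error returned to client:", exc)
```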

A CP system is harder and more expensive to implement.

AP [Availability/Partition Tolerance] Systems

With today’s customer-obsessed mindset, a system is expected to be up and running 24×7, so you have to think carefully and make informed decisions when choosing trade-offs. As shown above, a CP system is not “Available” in the event of a failure.

A resilient system design would, instead of sending an error when A-1 is down, return the data it received from A-2. The client may not get the latest data (this is hard for the system to decide). Hence the system is now considered “Available”, i.e. CAP’s “C” is sacrificed.
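A minimal sketch of this AP read path follows. The `Replica` dataclass, the `ap_read` function, and the `unverified` flag are hypothetical; the flag only records that some replica could not be consulted, which is the situation in which the answer may be stale.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    name: str
    up: bool = True
    data: dict = field(default_factory=dict)


def ap_read(replicas, key):
    """Answer from the first reachable replica.

    Returns (value, unverified); unverified is True when at least one
    replica was unreachable, so freshness could not be confirmed.
    """
    reachable = [r for r in replicas if r.up]
    if not reachable:
        raise RuntimeError("no replica reachable")
    unverified = len(reachable) < len(replicas)
    return reachable[0].data.get(key), unverified


a1 = Replica("A-1", data={"balance": 120})  # holds the newest write
a2 = Replica("A-2", data={"balance": 100})  # has not received it yet

a1.up = False  # A-1 is down, so the system answers from A-2
value, unverified = ap_read([a1, a2], "balance")
print(value, unverified)
```

The client gets an answer instead of an error, but the answer is A-2’s older value.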

CA [Consistency/Availability] Systems, Really?

In a distributed environment, we cannot avoid the “P” of CAP, so we have to choose between CP and AP systems. If we want a system that is both consistent and available, we must give up partition tolerance, which is possible only in non-distributed systems such as a single-node RDBMS.

Closing Note

CAP is frequently misunderstood as if one has to choose to abandon one of the three guarantees at all times. In fact, the choice is really between consistency and availability only when a network partition or failure happens; at all other times, no trade-off has to be made.

CAP is about choosing your trade-off in the event of a failure (network/node). In the absence of network failure, that is, when the distributed system is running normally, both availability and consistency can be satisfied.

In today’s world, we can achieve all 3 in a distributed system (if not fully, then at least partially), e.g. with tunable consistency in Cassandra.
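The idea behind tunable consistency can be sketched with the usual quorum arithmetic: with N replicas, a read that contacts R of them is guaranteed to overlap a write acknowledged by W of them when R + W > N. The snippet below assumes N = 3 for illustration (the example earlier in this article uses 2) and uses the level names ONE, QUORUM and ALL; the helper functions are hypothetical and do not call any Cassandra API.

```python
def quorum(n: int) -> int:
    """Smallest majority of n replicas."""
    return n // 2 + 1


def reads_overlap_writes(n: int, r: int, w: int) -> bool:
    """True when every read set of size r shares a replica with every write set of size w."""
    return r + w > n


N = 3  # assumed replication factor for this illustration
levels = {"ONE": 1, "QUORUM": quorum(N), "ALL": N}

for write_name, w in levels.items():
    for read_name, r in levels.items():
        kind = "consistent" if reads_overlap_writes(N, r, w) else "eventual"
        print(f"write={write_name:<6} read={read_name:<6} -> {kind}")
```

Lower levels keep the system answering when fewer replicas are reachable, while higher levels favour consistency, so the trade-off can be chosen per request.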

I am a hands-on architect with 19+ years of proven experience in architecting, designing, and developing distributed software solutions for large enterprises. At Microsoft, as a Principal Software Engineering Manager, I own the Platform team. I see software development as a craft, and I am a big proponent of software architecture and clean code discipline-n-practices. I like to see the bigger picture and make a broader impact. I was also a Microsoft MVP for the past 7 years on Visual Studio and Dev Technologies. I can be reached at vidya_mct@yahoo.com or on Twitter @dotnetauthor
